
We are making changes to the way we handle manipulated media on Facebook, Instagram and Threads based on feedback from the Oversight Board that we should update our approach to reflect a broader range of content that exists today and provide context about the content through labels. These changes are also informed by Meta’s policy review process that included extensive public opinion surveys and consultations with academics, civil society organizations and others.

We agree with the Oversight Board’s argument that our existing approach is too narrow since it only covers videos that are created or altered by AI to make a person appear to say something they didn’t say. Our manipulated media policy was written in 2020 when realistic AI-generated content was rare and the overarching concern was about videos. In the last four years, and particularly in the last year, people have developed other kinds of realistic AI-generated content like audio and photos, and this technology is quickly evolving. As the Board noted, it’s equally important to address manipulation that shows a person doing something they didn’t do.

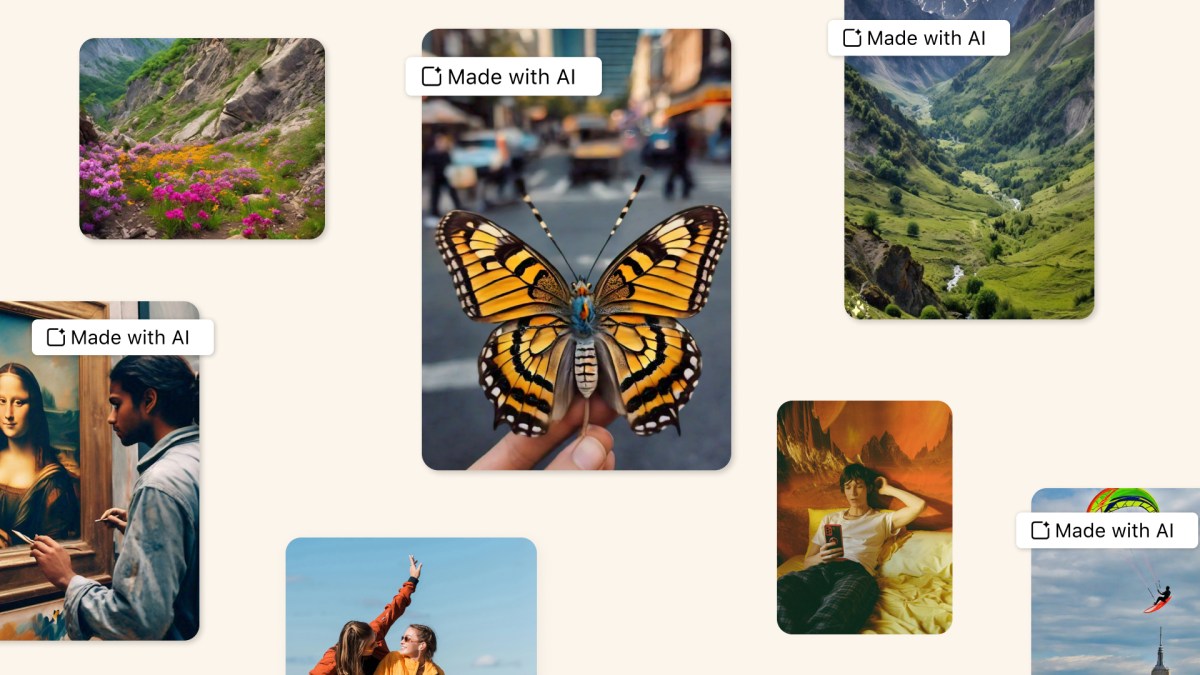

The Board also argued that we unnecessarily risk restricting freedom of expression when we remove manipulated media that does not otherwise violate our Community Standards. It recommended a “less restrictive” approach to manipulated media like labels with context. In February, we announced that we’ve been working with industry partners on common technical standards for identifying AI content, including video and audio. Our “Made with AI” labels on AI-generated video, audio and images will be based on our detection of industry-shared signals of AI images or people self-disclosing that they’re uploading AI-generated content. We already add “Imagined with AI” to photorealistic images created using our Meta AI feature.

We agree that providing transparency and additional context is now the better way to address this content. The labels will cover a broader range of content in addition to the manipulated content that the Oversight Board recommended labeling. If we determine that digitally created or altered images, video or audio create a particularly high risk of materially deceiving the public on a matter of importance, we may add a more prominent label so people have more information and context. This overall approach gives people more information about the content so they can better assess it and so they will have context if they see the same content elsewhere.

We will keep this content on our platforms so we can add informational labels and context, unless the content otherwise violates our policies. For example, we will remove content, regardless of whether it is created by AI or a person, if it violates our policies against voter interference, bullying and harassment, violence and incitement, or any other policy in our Community Standards. We also have a network of nearly 100 independent fact-checkers who will continue to review false and misleading AI-generated content. When fact-checkers rate content as False or Altered, we show it lower in Feed so fewer people see it, and add an overlay label with additional information. In addition, we reject an ad if it contains debunked content, and since January, advertisers have to disclose when they digitally create or alter a political or social issue ad in certain cases.

We plan to start labeling AI-generated content in May 2024, and we’ll stop removing content solely on the basis of our manipulated video policy in July. This timeline gives people time to understand the self-disclosure process before we stop removing the smaller subset of manipulated media.


Policy Process Informed By Global Experts and Public Surveys

In spring 2023, we began reevaluating our policies to see if we needed a new approach to keep pace with rapid advances in generative AI technologies and usage. We completed consultations with over 120 stakeholders in 34 countries in every major region of the world. Overall, we heard broad support for labeling AI-generated content and strong support for a more prominent label in high-risk scenarios. Many stakeholders were receptive to the concept of people self-disclosing content as AI-generated.

A majority of stakeholders agreed that removal should be limited to only the highest risk scenarios where content can be tied to harm, since generative AI is becoming a mainstream tool for creative expression. This aligns with the principles behind our Community Standards – that people should be free to express themselves while also remaining safe on our services.

We also conducted public opinion research with more than 23,000 respondents in 13 countries and asked people how social media companies, such as Meta, should approach AI-generated content on their platforms. A large majority (82%) favor warning labels for AI-generated content that depicts people saying things they did not say.

Additionally, the Oversight Board noted that its recommendations were informed by consultations with civil society organizations, academics, intergovernmental organizations and other experts.

Based on feedback from the Oversight Board, experts and the public, we’re taking steps we think are appropriate for platforms like ours. We want to help people know when photorealistic images have been created or edited using AI, so we’ll continue to collaborate with industry peers through forums like the Partnership on AI and remain in dialogue with governments and civil society – and we’ll continue to review our approach as technology progresses.
